Event-based Enhanced: A Joint Detection Framework in Autonomous Driving

Published in IEEE International Conference on Multimedia and Expo (ICME), 2019

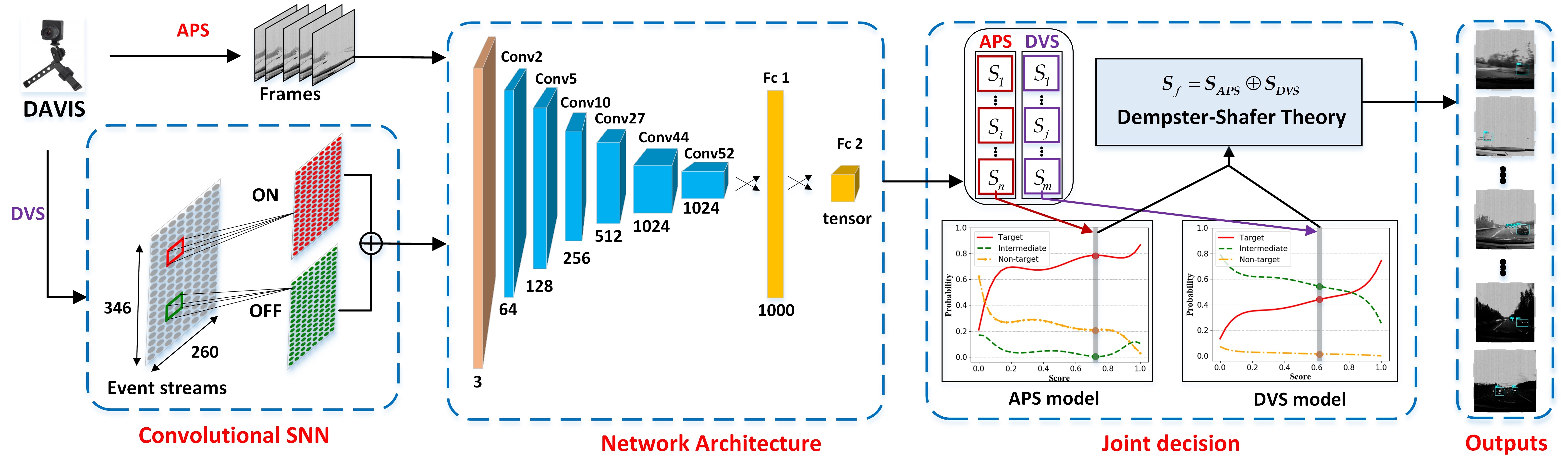

Abstract. Due to high-speed motion blur and low dynamic range, conventional frame-based cameras face an important challenge in object detection, especially in autonomous driving. Event-based cameras, by taking advantage of high temporal resolution and high dynamic range, have brought a new perspective to address this challenge. Motivated by this fact, this paper proposes a joint framework combining event-based and frame-based vision for vehicle detection. Specifically, two separate event-based and frame-based streams are incorporated into a convolutional neural network (CNN). In addition, to accommodate the asynchronous events from event-based cameras, a convolutional spiking neural network (SNN) is utilized to generate visual attention maps so that the two streams can be synchronized. Moreover, Dempster-Shafer theory is introduced to merge the two outputs from the CNN in a joint decision model. The experimental results show that the proposed approach outperforms state-of-the-art methods that use only frame-based information, especially in fast motion and challenging illumination conditions.
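To illustrate the joint decision step named in the abstract, the following is a minimal sketch of Dempster's rule of combination for two sources. It is not the paper's implementation: the two-class frame of discernment (`vehicle`, `background`), the function name `combine_dempster`, and the example mass values are assumptions chosen for illustration. How the paper derives masses from the CNN outputs is not stated here.

```python
from itertools import product


def combine_dempster(m1, m2):
    """Fuse two mass functions with Dempster's rule of combination.

    Each mass function maps a frozenset of hypotheses to a mass in [0, 1],
    and the masses of each function sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            # Agreeing evidence supports the intersection of the two sets.
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            # Disjoint sets contribute to the conflict term K.
            conflict += mass_a * mass_b
    norm = 1.0 - conflict
    if norm <= 1e-12:
        raise ValueError("Sources are in total conflict; the rule is undefined.")
    # Renormalize by 1 - K so the fused masses sum to 1.
    return {hypothesis: mass / norm for hypothesis, mass in combined.items()}


# Hypothetical masses for one candidate detection (illustrative values only).
VEHICLE = frozenset({"vehicle"})
BACKGROUND = frozenset({"background"})
UNKNOWN = VEHICLE | BACKGROUND  # mass on the whole frame models ignorance

frame_stream = {VEHICLE: 0.6, BACKGROUND: 0.1, UNKNOWN: 0.3}
event_stream = {VEHICLE: 0.7, BACKGROUND: 0.1, UNKNOWN: 0.2}

fused = combine_dempster(frame_stream, event_stream)
for hypothesis, mass in fused.items():
    print(sorted(hypothesis), round(mass, 4))
```

With these assumed inputs, the two streams agree on `vehicle`, so the fused belief in `vehicle` is higher than either stream's belief alone, while the mass left on the ignorance set shrinks.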

Reference:

- Jianing Li, Siwei Dong, Zhaofei Yu, Yonghong Tian, Tiejun Huang, “Event-based Enhanced: A Joint Detection Framework in Autonomous Driving”, IEEE International Conference on Multimedia and Expo (ICME), 2019.